Your go-to user research platform

The best teams use Lyssna so they can deeply understand their audience and move in the right direction — faster.

No credit card required

All your research in one place

The only platform you’ll need to uncover user insights

How it works

Bring the voice of your audience into every decision.

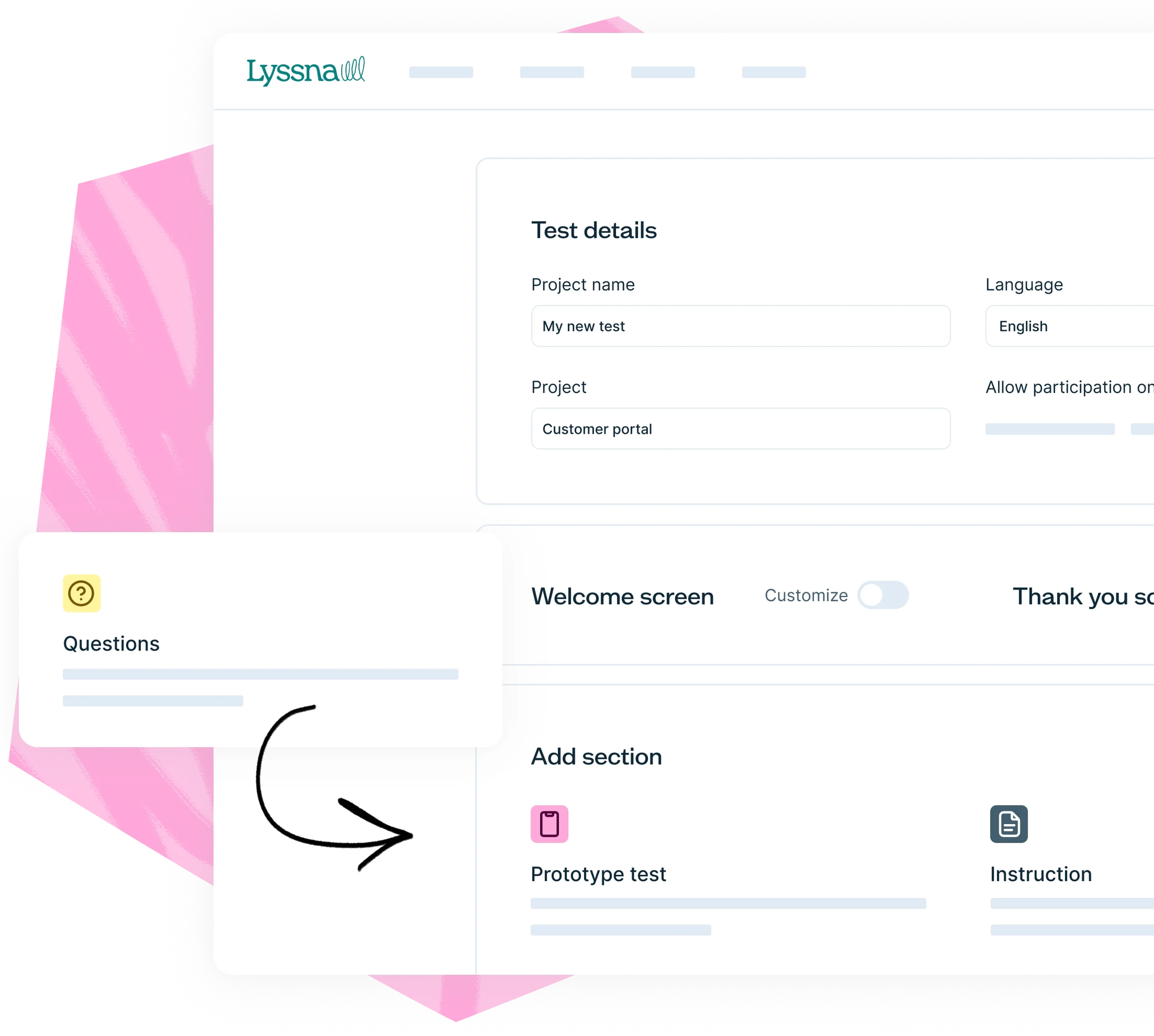

Create a study

Create a range of usability tests using a wide variety of methodologies, or set up user interviews.

Recruit participants

Discover valuable insights

Get started with a template

Explore our template library to find a wide range of pre-designed templates.

Marketing

“We used to spend days collecting the data we can now get in an hour with Lyssna. We're able to get a sneak preview of our campaigns' performance before they even go live.”

Aaron Shishler, Copywriter Team Lead at monday.com

Try for free today

Join more than 320,000 marketers, designers, researchers, and product leaders who use Lyssna to make data-driven decisions.
